Introduction

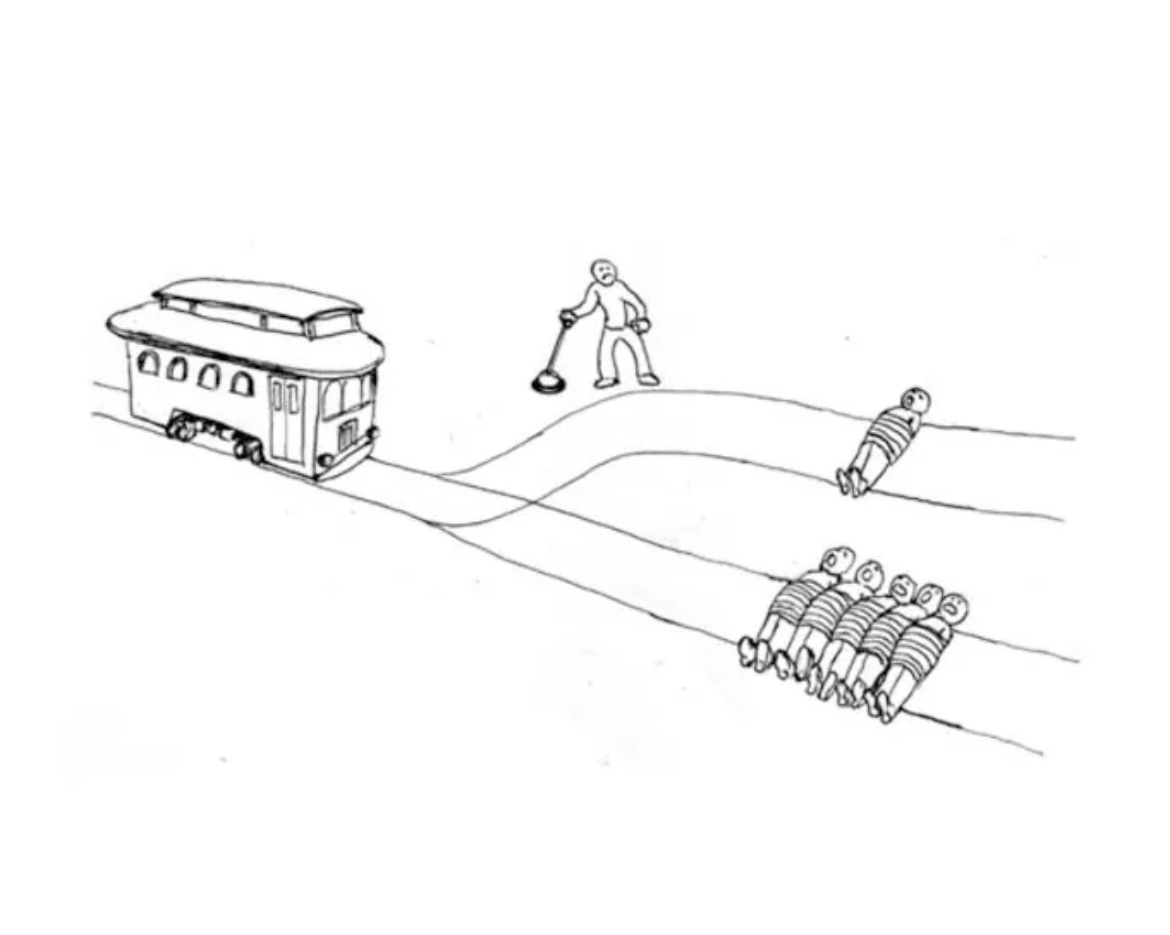

Imagine you're standing next to a set of train tracks. A runaway trolley is heading straight for five people who are tied up and can't move. You're next to a lever that can switch the trolley to another track, but there's one person stuck on that one too. If you do nothing, five people will die. If you pull the lever, one person dies instead. What do you do?

This is the trolley problem, a classic thought experiment that gets people arguing about right and wrong. Some say you should pull the lever because saving more lives is clearly better. Others say it's wrong to get involved and cause someone's death, even if the outcome saves more people. It's a simple setup with no easy answer, and that's exactly why it’s stuck around for so long. In my experience, most people prefer the utilitarian answer, which is to pull the lever. Still, there is a strong case for the deontological position: refuse to pull the lever, and so refuse to become an agent of death.

Both Sides in Depth

Pulling the Lever

A lot of people say you should pull the lever. Why? Because five lives are more than one. It seems like simple math. If you're in a position to stop more people from dying, you should do it. This way of thinking is called utilitarianism. The idea is that the right thing to do is whatever causes the least amount of harm or brings the most overall good.

To a utilitarian, not pulling the lever is basically letting five people die when you could have prevented it. Even though pulling the lever means someone still dies, it's the “less bad” option. In this option, you take control of a bad situation and make it slightly better. You’re doing something to reduce suffering, and that feels like the responsible choice.

The argument here is that inaction isn’t really neutral. If you let five people die when you had a way to save them, you're still part of the outcome. So, from this view, pulling the lever might feel awful, but it’s morally the better call.

Why did every AI model I asked answer using this framework? Are they deliberately taught to think this way, or is utilitarianism just the predominant moral calculus used in everyday discourse?

Not Pulling the Lever

Now, the other side says: don’t pull the lever. Here's why: even though it seems like saving more people is better, pulling that lever makes you directly responsible for someone's death. You’re not just letting something happen, you’re doing something that kills a person. That’s a big difference. Moreover, that person was never going to die without your intervention, because the trolley was not headed for them, so you are 100% responsible for their death. The trolley was already going to hit the five people, so letting them die amounts to letting events take their course.

This way of thinking follows a rule-based approach to ethics. Some people believe there are moral lines you shouldn’t cross, no matter what the outcome is. Killing an innocent person on purpose, even to save others, is crossing that line. Once you decide that it’s okay to kill in certain situations, it becomes harder to draw the line later on.

There’s also the emotional side. Pulling the lever feels like playing God. It’s you deciding who lives and who dies. That kind of decision can stick with someone forever. For some people, it’s better to live with the guilt of not acting than to carry the weight of causing someone’s death directly.

While you might be sitting at home, convinced that pulling the lever is correct, Michael Stevens (VSauce on YouTube) found otherwise. In his experiment, most people who said they would pull the lever were unable to do so when actually faced with the situation. This suggests that many people would rather live with the guilt of doing nothing than with the guilt of being an agent of death.

Transparency in AI

Before writing a single word on the page, I wanted to find something out: was there any disparity between different AI models’ responses to this problem? There wasn’t. ChatGPT, Claude, Gemini, and DeepSeek all said the same thing: pull the lever. Initially I thought this was because AI models are trained on what they see online, and since utilitarianism generally persists as the most used framework in discourse, the models preferred this method. And while this might be true to some extent, after looking into VSauce’s experiment on YouTube, I think emotion and guilt clearly have a big role in this problem. Since AI is incapable of human emotion, pulling the lever appears to be the most logical option. Why would an AI model care about being an agent of death?

However, I think this brings up an issue in the current generation of AI models. When I asked each model to explain its decision, it gave an analysis of utilitarianism but was unable to process the benefit of the other side. While AI is generally better than humans at most things, to me it seems that AI cannot be used in situations where human emotions play a central role. While ChatGPT (or any other model) might be able to fake human emotions in writing based on what it is trained on, right now it cannot apply that to a problem such as this one. This highlights the caution we should take when using AI to make decisions for us. Only we can understand our emotions, and AI lacks that understanding, so in areas like the courtroom or even HR scenarios, human input remains necessary and likely will for a long time.