Navigating the Ethical Landscape of AI: Biases

We all have biases, whether conscious or not.

Biases:

Introduction

Imagine this: You’re using an AI-driven app to get job recommendations. The app, as expected, suggests jobs and roles tailored to your profile, but you start to notice that some of the suggestions seem to reinforce traditional gender roles. You wonder, "Why is this happening?" This scenario highlights a critical issue in AI: ethics. As AI systems gain more presence in, and influence over, our daily lives, understanding and addressing ethical considerations is a must. And I assure you, I’m not just fearmongering for content. This has really happened.

From Bloomberg Law: “The US Equal Employment Opportunity Commission (EEOC) settled its first-ever AI discrimination in hiring lawsuit for $365,000 with a tutoring company that allegedly programmed its recruitment software to automatically reject older applicants.”

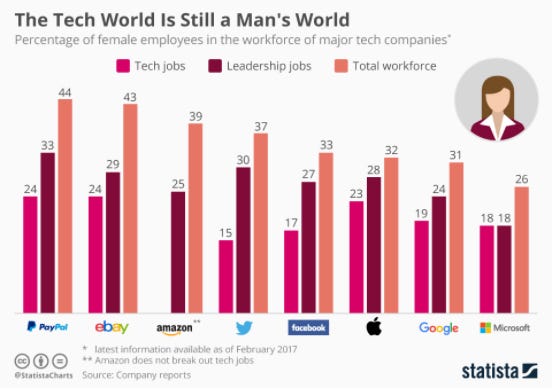

Years earlier, Amazon found that one of its hiring algorithms was disadvantaging women who applied for technical jobs. The ACLU explains this well, saying: “It shouldn’t surprise us at all that the tool developed this kind of bias. The existing pool of Amazon software engineers is overwhelmingly male, and the new software was fed data about those engineers’ resumes. If you simply ask software to discover other resumes that look like the resumes in a “training” data set, reproducing the demographics of the existing workforce is virtually guaranteed.”

If you want to read more about this, I highly recommend checking out this article from Reuters.

Relevant as these examples are, it’s not just about job recommendations or hiring. The more freedom we give AI, and the more jobs we try to automate with it, the worse the consequences of AI bias can become.

Consider the case of AI algorithms being used in criminal justice systems to inform sentencing or parole decisions. If these algorithms are biased, they can disproportionately affect certain groups, leading to longer sentences or harsher penalties for minorities. In healthcare, AI biases can result in misdiagnoses or inadequate treatment recommendations for certain populations, jeopardizing lives. For more on this, see Yale Medicine. In the financial sector, AI-driven credit scoring systems can unjustly deny loans to people because of biases learned from flawed or misinterpreted data, heightening economic inequality.

So how do we fix this?

Well, I’m glad you asked :)

Breaking down the problem

To start, it is essential that we break down AI bias.

What are some of the common reasons for AI bias?

We touched on the idea of biased data in the introduction, but that is only one example. It’s also important to note that more than one of these design flaws can be present in the same AI model.

Biased Training Data:

AI systems learn from data. If the data used to train these systems contains biases, the AI will most likely replicate those biases.

Imbalanced Datasets:

When the training data is not representative of the entire population, the AI model sees too few examples of underrepresented groups and tends to perform worse for them.
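One rough way to spot this kind of imbalance is to compare each group's share of the training data with its share of the population the model is meant to serve. The sketch below is only an illustration: the function name `representation_gaps`, the group labels, and the population shares are all made up for the example.

```python
from collections import Counter


def representation_gaps(groups, population_shares):
    """Return each group's share of the dataset minus its expected share.

    `groups` holds one group label per training example.
    `population_shares` maps each label to its expected share (0 to 1).
    A negative gap means the group is underrepresented in the data.
    """
    counts = Counter(groups)
    total = len(groups)
    return {
        label: counts.get(label, 0) / total - expected
        for label, expected in population_shares.items()
    }


# Hypothetical training set: 80 examples from group "A", 20 from group "B".
training_groups = ["A"] * 80 + ["B"] * 20
# Assumed population: evenly split between the two groups.
gaps = representation_gaps(training_groups, {"A": 0.5, "B": 0.5})

for label, gap in gaps.items():
    print(f"{label}: {gap:+.2f}")
```

In this made-up dataset, group "B" makes up 20% of the examples but 50% of the assumed population, so its gap comes out negative. A gap like that doesn't prove the model is unfair, but it is a good reason to look closer.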

Algorithmic Bias:

Sometimes, the algorithms themselves can introduce bias. A machine learning model might inadvertently give extra weight to features that correlate with sensitive attributes like race or gender, so those features act as stand-ins for the attribute even when the model is never given it directly.
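To make the idea of a stand-in feature concrete, here is a minimal sketch that checks how strongly each feature correlates with a sensitive attribute. Everything in it is hypothetical: the feature names (`career_gap_years`, `typing_speed`), the tiny dataset, and the choice of plain Pearson correlation as the check. A high correlation is a hint that a feature could act as a proxy, not proof that the model uses it that way.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical applicants. `is_group_b` is the sensitive attribute (1 = yes),
# which the model is never shown directly.
is_group_b = [0, 0, 0, 0, 1, 1, 1, 1]
# Made-up features the model does see.
career_gap_years = [0, 0, 1, 0, 2, 3, 1, 2]
typing_speed = [60, 70, 65, 75, 75, 60, 70, 65]

proxy_corr = pearson(career_gap_years, is_group_b)
neutral_corr = pearson(typing_speed, is_group_b)
print(f"career_gap_years vs. group: {proxy_corr:.2f}")
print(f"typing_speed vs. group:     {neutral_corr:.2f}")
```

In this toy data, `career_gap_years` tracks group membership closely while `typing_speed` does not, so a model that leans on career gaps could end up treating the two groups differently without ever seeing the group label.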

Human Error:

The biases of human developers can seep, intentionally or not, into the AI systems they create. Decisions about which data to use, which variables to consider, and how to interpret outcomes can all introduce bias.

Approaching Biases

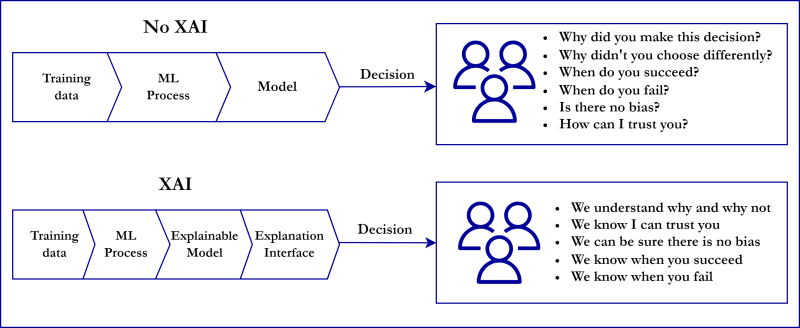

Transparency Tools: Developing tools that make AI decision-making more transparent and understandable can help users trust AI systems. Explainable AI (XAI) is a field focused on creating AI whose actions can be easily understood by humans. For example, you could ask an AI for its “thought” process and have it tell you how it reached its conclusion.
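As a very simplified picture of what an explanation can look like, consider a model that scores applicants with a weighted sum. For a model like that, each feature's contribution to the score is just its weight times its value, so the model can report exactly what pushed the score up or down. This is a sketch under that assumption only; the weights, feature names, and the `explain_score` helper are invented for illustration, and real XAI tools for more complex models work differently.

```python
def explain_score(weights, features):
    """Break a linear score into per-feature contributions (weight * value)."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    return sum(contributions.values()), contributions


# Hypothetical weights and applicant; none of these come from a real system.
weights = {"years_experience": 2.0, "num_certifications": 1.0, "career_gap_years": -3.0}
applicant = {"years_experience": 5, "num_certifications": 2, "career_gap_years": 2}

score, contributions = explain_score(weights, applicant)
print(f"score: {score}")
# List features from most to least influential.
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"  {name}: {value:+.1f}")
```

An explanation like this makes it easy to notice when a questionable feature, such as the made-up `career_gap_years` here, is dragging a score down.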

Bias Audits and Fairness Metrics: Regularly auditing AI systems for bias, and developing metrics to measure fairness (for example, comparing how often each group receives a favorable outcome), can help identify and correct biases. This can involve scrutinizing training data, algorithms, and/or outputs to ensure fair treatment for all user groups.
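A bias audit can start with something as simple as comparing how often each group receives the favorable outcome. The sketch below computes each group's selection rate and the ratio between the lowest and highest rates, sometimes called the disparate impact ratio. The data and function names are hypothetical, and this is one metric among many rather than a complete audit.

```python
def selection_rates(decisions, groups):
    """Fraction of favorable decisions (1 = favorable) for each group."""
    totals, favorable = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        favorable[group] = favorable.get(group, 0) + decision
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest.

    Assumes at least one group has a nonzero selection rate.
    """
    return min(rates.values()) / max(rates.values())


# Hypothetical audit log: 1 = recommended for interview, 0 = rejected.
decisions = [1, 1, 1, 0, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"disparate impact ratio: {ratio:.2f}")
```

A common rule of thumb in US employment contexts, the "four-fifths rule", treats a ratio below 0.8 as a sign of possible adverse impact. In this made-up log, group "B" is selected far less often than group "A", so the ratio falls well under that line and the system would deserve a closer look.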

Thanks for reading! Stay tuned for my next post! If you enjoyed this post, please don’t forget to like and subscribe!