Generative AI and Copyright Law

What happens when we run out of material to train our models?

Introduction

Intellectual property law is one of the most confusing and debated areas of the legal system. Take patent law as an example: Section 101 of the Patent Act says that an invention must fall into one of four categories to qualify for a patent:

A process

A machine

A manufactured item, or

A composition of matter (like a chemical compound)

It also has to be “new and useful.” But what counts as “useful”? And how do you decide if something is just a minor tweak or a meaningful improvement?

Now let’s talk about copyrights. The big debate right now is whether generative AI models can legally use copyrighted materials for training under the ‘fair use’ exception. The problem is that ‘fair use’ is vague and depends on the situation.

Here’s the issue: AI companies say that training their models on copyrighted works qualifies as fair use. This means they don’t think they need permission or have to pay the creators.

Example: If your teacher photocopies a paragraph from a book for a class, it’s probably fine under fair use. But if they copied the whole book, it wouldn’t be.

AI companies claim their use is “transformative,” which is one of the key factors in fair use. But how much material can they use before it stops being fair? Where do we draw the line? Why should we draw the line?

This question has huge implications for creators, AI companies, and the future of copyright law.

Question

Should we force AI companies to obtain a license for every work they use to train their models?

If you’re reading this and it sounds really debatey, that’s probably because it is. This is one of this year’s most common arguments on the ’24-’25 policy debate topic, which I encourage you to look up; it’s pretty interesting.

Without going into the super-low-probability extinction impacts, I do think that at some point it becomes necessary to start paying for licenses, albeit not one for every individual work. I also believe that licenses will eventually become required as more people become aware of the issue. As for implementation, I think one solution (vaguely outlined here) is a common license for each group of works, i.e. one for a database of scientific papers, another for journalism, and so on. The implementation has its own issues, but I have diverged long enough from the main point of this blog.

The Issue

Model Collapse = a phenomenon in artificial intelligence (AI) where generative models degrade over time. This happens when models are trained on AI-generated data, which can lead to a loss of information and a decline in performance.

Model collapse can lead to AI hallucination and mass data poisoning.

The argument is as follows:

AI is trained by taking a ton of text, spotting patterns, and trying to mimic them. Along the way, it’s bound to pick up stuff humans didn’t intend or miss things it should’ve caught. That’s pretty normal, expected, and probably accounted for somewhere in the process or code.

But here’s the catch: what happens if we train new AIs using text that was created by older AIs? It’s like a game of telephone but with machines. The first AI, trained on human-written stuff, does a decent job but makes a few mistakes. Then the second AI, trained on the first one, picks up those mistakes and amplifies them. The third AI does the same thing, only now it’s working with even bigger errors. This process of magnifying errors through every iteration is called model collapse, and it keeps snowballing until you’ve got AIs spewing nonsense.
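If you want to see the telephone game in action, here is a minimal toy sketch. It is not how real generative models are trained: the "model" here is just a bell curve (a mean and a spread) fitted to a handful of numbers, and every name and parameter (`simulate_generations`, `sample_size`, the seed) is my own assumption for illustration. Each generation fits itself only to samples produced by the previous generation, and the spread of what it can produce tends to shrink over time, which is a rough stand-in for the loss of information described above.

```python
import random
import statistics


def simulate_generations(num_generations=500, sample_size=10, seed=0):
    """Toy model-collapse loop: each 'model' is a Gaussian fitted to the
    previous model's output. Returns the fitted spread per generation.
    All parameters are illustrative assumptions, not measured values."""
    rng = random.Random(seed)

    # Generation 0 trains on "human" data: samples from a standard normal.
    data = [rng.gauss(0.0, 1.0) for _ in range(sample_size)]

    spreads = []
    for _ in range(num_generations):
        # "Train" the model: estimate mean and standard deviation.
        mu = statistics.fmean(data)
        sigma = statistics.stdev(data)
        spreads.append(sigma)

        # The next generation sees only what this model generates.
        data = [rng.gauss(mu, sigma) for _ in range(sample_size)]

    return spreads


if __name__ == "__main__":
    spreads = simulate_generations()
    # Print the fitted spread at a few generations to watch it drift.
    for generation in (0, 100, 200, 300, 400, 499):
        print(f"generation {generation}: spread = {spreads[generation]:.3e}")
```

The small `sample_size` is deliberate: the fewer samples each generation sees, the faster the estimation errors compound. With more data per generation the same drift still happens, just more slowly.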

That makes hallucinations (when AI makes up facts or credits the wrong sources) more likely. It also ramps up the risk of data poisoning, where bad info sneaks in and throws off the AI’s conclusions. It’s a spiral of messy, broken results. Since I promised not to get into the big, fear-mongering impacts, I encourage you to look into the implications of AI hallucinations and data poisoning on your own.

The Link. How is this all related?

How will model collapse happen? This ‘will’ (I put this in quotation marks because nothing is 100% certain) happen because journals and creatives will get defunded as AI companies claim ‘fair use’ over their works. That leads to a shortage of the human-made data needed to train these models, which in turn leads to models training on AI-generated content. *AI-generated content is said to be on track to become the majority of journalism and creative work, since original human work is not rewarded any differently than AI-generated work and is, if anything, more time-consuming.

“The problem with AI-generated content is that it doesn’t provide new insights. AI tools can’t create new perspectives, which means they just repeat already existing information.” (WebFX)

There would be no new data to train the models on if AI trains itself on AI generated works.

This is the reason why licensing is being proposed.

Licensing ‘solves’ this by rewarding human works differently, which prevents model collapse both through internet scraping (explained further in the A ‘Solution’? section of the blog if unclear) and through the erosion of human-generated journalism. By adhering to licensing agreements, AI developers can scrape data ethically and legally from the internet, reducing the risks associated with using low-quality or unauthorized content, including the risk of models training themselves on low-quality AI-generated articles.

Examples

Here are some examples of exactly this (the images are linked if you want to see the original source):

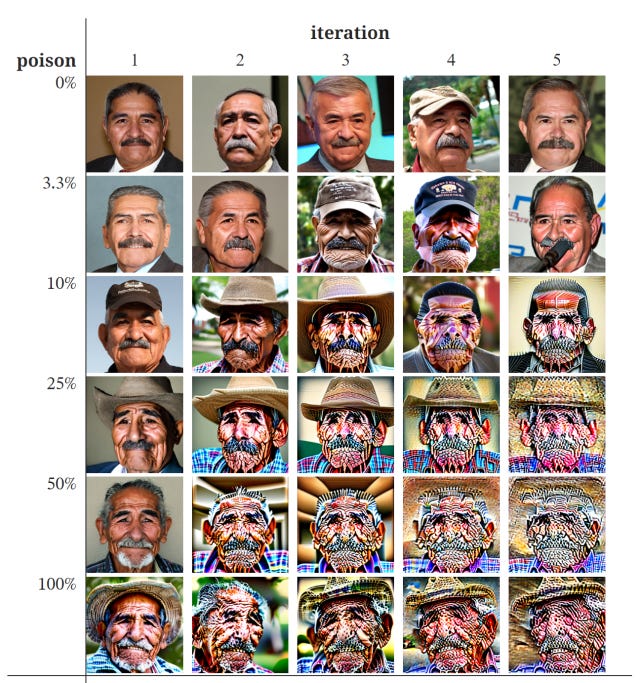

Even at 3.3% poisoning (iteration 3+), faces become unrecognizable. If anything, this should show the urgency of this problem to the Gen AI industry.

Here’s another example:

That’s not even a dog at the end; it looks more like a cookie, to be honest.

A ‘Solution’?

So how do we solve this? Can we solve this? I mean we kind of need to if we want to prevent model collapse and the reduction of the Gen AI industry to gibberish.

For starters, some companies are already partnering with Gen AI companies to produce works that AI can be trained on, with a license of course. But the biggest issue is preventing model collapse through internet scraping, as that sticks out to me as the most likely way for this scenario to occur. As the amount of AI-generated work on the internet increases, one can only conclude that models scraping the internet are effectively training themselves on an increasing amount of AI-generated data.
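To make the scraping point concrete, here is a back-of-the-envelope sketch. Every number in it is made up purely for illustration (the starting document counts and both growth rates are my assumptions, not measurements), and `ai_share_over_time` is a hypothetical helper. The only thing it shows is the arithmetic: if AI-generated content grows faster than human-written content, its share of anything scraped from the internet goes up every year.

```python
def ai_share_over_time(human_docs=1000.0, ai_docs=10.0,
                       human_growth=1.02, ai_growth=1.5, years=10):
    """Share of AI-generated documents in a scrape, year by year.
    All default values are invented for illustration only."""
    shares = []
    for _ in range(years + 1):
        # Fraction of the scraped pool that is AI-generated this year.
        shares.append(ai_docs / (human_docs + ai_docs))

        # Both pools grow, but the AI pool grows faster (assumed).
        human_docs *= human_growth
        ai_docs *= ai_growth
    return shares


if __name__ == "__main__":
    for year, share in enumerate(ai_share_over_time()):
        print(f"year {year}: {share:.1%} of scraped documents are AI-generated")
```

Changing the growth rates changes how fast the share climbs, but as long as `ai_growth` is larger than `human_growth`, the direction stays the same.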


I don’t think any solution can be summarized in one post, or maybe even fully articulated at all. As a broad umbrella solution, I think the answer is rewarding human creativity, through licensing or some other way. Moreover, I think internet scraping by AI models should be outlawed, so that AI companies only work with a set database of works. However, given that AI models need an astronomical amount of data to be trained properly, there at least has to be some way to identify AI-generated works, especially when people try to pass them off as their own.